One of Go’s most notable features is that it supports concurrency at the language level through goroutines. A goroutine is the most basic execution unit in Go. In fact, every Go program has at least one goroutine: the main goroutine, which is created automatically when the program starts. In this article, we will look at goroutines in detail: what goroutines and coroutines are, how goroutines are scheduled, how to manage goroutines, and how to use channels for communication and synchronization.

What is a goroutine?

In order to better understand Goroutine, let’s talk about the concepts of threads and coroutines.

- Thread: Sometimes called a lightweight process (LWP), a thread is the smallest unit of program execution flow. A standard thread consists of a thread ID, a current instruction pointer (PC), a register set, and a stack. A thread is an entity within a process and is the basic unit that the system schedules and dispatches independently. A thread does not own system resources itself, apart from the few that are essential for it to run, but it shares all the resources owned by the process with the other threads of that process. Each thread has its own stack, while the heap is shared. Thread switching is generally scheduled by the operating system.

- Coroutine: Also known as a micro-thread. Like a subroutine (or function), a coroutine is a program component. Coroutines are more general and flexible than subroutines, but are not as widely used in practice.

Similar to threads, coroutines share the heap but not the stack. Switching between coroutines is generally controlled explicitly by the programmer in code. This avoids the extra cost of context switching, keeps many of the advantages of multi-threading, and reduces the complexity of highly concurrent programs.

Goroutines are used in much the same way as coroutines in other languages, but the difference is more than the name. A coroutine is a cooperative task control mechanism: in the simplest sense, coroutines do not run concurrently, whereas goroutines do. A goroutine can therefore be understood as Go’s version of a coroutine, and the goroutines of a program can be spread across one or more threads at the same time.

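Let’s take an example:

    package main

    import "fmt"

    func loop() {
        // The loop bound is missing in the original; 10 is an assumed value.
        for i := 0; i < 10; i++ {
            fmt.Printf("%d ", i)
        }
    }

    func main() {
        go loop() // runs in a new goroutine
        loop()    // runs in the main goroutine
        // main does not wait for the new goroutine, so its output may be cut off.
    }

How are goroutines scheduled?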

Go solves concurrency problems with user-level lightweight threads, similar to coroutines, which it calls goroutines. A goroutine is very lightweight: it starts with only a few KB of memory, which is enough for it to run, so a large number of goroutines fit in a limited amount of memory and more concurrency can be supported. Switching between goroutines does not need to go through the operating system kernel, so its cost is very low. As a result, a Go program can create thousands of concurrent goroutines. All Go code runs in goroutines, and the Go runtime’s own code is no exception. The component that places these goroutines on the “CPU” for execution according to a certain algorithm is called the goroutine scheduler.

Go makes concurrency easier to use through goroutines and channels. Goroutines come from the concept of coroutines: a set of reusable functions runs on a set of threads. Even if one goroutine blocks, the runtime can still schedule the other goroutines of that thread and move them to other runnable threads. Most importantly, programmers do not have to deal with these low-level details, which lowers the difficulty of concurrent programming.

To the operating system, a Go program is just a user-level program. The operating system only sees threads and does not even know that goroutines exist. Goroutine scheduling must therefore be done by Go itself, so that the goroutines within a Go program compete fairly for CPU resources. This task falls to the Go runtime: in a Go program, everything other than user code is the Go runtime.

So the goroutine scheduling problem becomes the question of how the Go runtime schedules the many goroutines in a program onto CPU resources according to a certain algorithm. At the operating system level, the “CPU” resource that threads compete for is the real physical CPU, but at the Go program level, the resource that goroutines compete for is the operating system thread. The task of the Go scheduler is then clear: place goroutines onto different operating system threads for execution according to a certain algorithm. Having such a scheduler built into the language is what is meant by native language support for concurrency.

The GMP Model

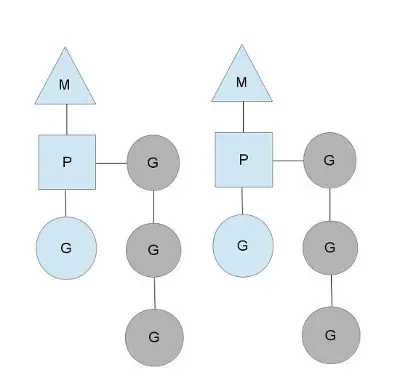

P is a “logical processor”. For a G to actually run, it must first be assigned to a P. From G’s point of view, P is the “CPU” that runs it, and P is all that G sees. From the Go scheduler’s point of view, however, the real “CPU” is M: only when a P is bound to an M can the Gs in P’s runq actually run. This relationship between P and M is like the mapping between user threads and kernel threads (N x M) at the scheduling level of the Linux operating system.

- G: represents a goroutine. It stores the goroutine’s execution stack, its status, its task function, and its binding to the P it belongs to. G objects can be reused.

- P: represents a logical processor. The number of Ps determines the maximum number of Gs that can run in parallel (provided the number of physical CPU cores is >= the number of Ps). P also owns various G queues, linked lists, caches, and state. Each P manages a set of goroutine queues and stores the context of the goroutine that is currently running (function pointer, stack address, and address boundary). P performs some scheduling on the queues it manages, such as pausing a goroutine that has used the CPU for a long time and running the next one. When its own queue is empty, it takes tasks from the global queue; if the global queue is also empty, it takes tasks from another P’s queue.

- M: stands for machine and represents the real computing resource that executes code. M is the Go runtime’s abstraction of an operating system kernel thread, and M and kernel threads generally map one-to-one. Every goroutine is ultimately executed on an M. After binding a valid P, M enters the schedule loop: it obtains a G from P’s local queue or one of the other queues, switches to G’s execution stack and runs G’s function, calls goexit to clean up, returns to M, and repeats. M does not retain G’s state, which is what allows a G to be scheduled across Ms.
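The lookup order described for P (local queue first, then the global queue, then another P’s queue) can be sketched as a toy model. This is not the runtime’s code: the `P` type, `pop`, and `findRunnable` below are hypothetical names invented for the illustration, and the real scheduler has more cases than these three.

```go
package main

import "fmt"

// P is a toy stand-in for a logical processor: an id plus a local run
// queue of goroutine names. It is not the runtime's own type.
type P struct {
	id   int
	runq []string
}

// pop removes and returns the first element of a queue, if there is one.
func pop(q *[]string) (string, bool) {
	if len(*q) == 0 {
		return "", false
	}
	g := (*q)[0]
	*q = (*q)[1:]
	return g, true
}

// findRunnable looks for work in the order described above:
// the local queue, then the global queue, then another P's queue.
func findRunnable(p *P, global *[]string, all []*P) (string, string) {
	if g, ok := pop(&p.runq); ok {
		return g, "local"
	}
	if g, ok := pop(global); ok {
		return g, "global"
	}
	for _, other := range all {
		if other == p {
			continue // do not steal from ourselves
		}
		if g, ok := pop(&other.runq); ok {
			return g, fmt.Sprintf("stolen from P%d", other.id)
		}
	}
	return "", "idle"
}

func main() {
	p0 := &P{id: 0, runq: []string{"G1"}}
	p1 := &P{id: 1, runq: []string{"G3", "G4"}}
	global := []string{"G2"}
	all := []*P{p0, p1}

	for i := 0; i < 4; i++ {
		g, from := findRunnable(p0, &global, all)
		fmt.Printf("P0 runs %s (%s)\n", g, from)
	}
}
```

In this model P0 runs G1 from its local queue, then G2 from the global queue, and then takes G3 and G4 from P1.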

P and M generally have a one-to-one correspondence: P manages a group of Gs and mounts them on M to run. When a G blocks on an M for a long time, the runtime creates a new M, and the P that owned the blocked G mounts its other Gs on the new M. When the old G finishes blocking or is considered dead, the old M is recycled.

The number of Ps is set through runtime.GOMAXPROCS (older Go releases capped it at 256; Go 1.10 removed the limit). Since Go 1.5, it defaults to the number of logical CPUs available. When the amount of concurrency is large, the runtime may create additional Ms, but not too many: if switching becomes too frequent, the cost outweighs the gain.

In terms of scheduling alone, Go’s advantage over other languages is this: OS threads are scheduled by the OS kernel, while goroutines are scheduled by the Go runtime’s own scheduler, which uses a technique called m:n scheduling (multiplexing m goroutines onto n OS threads). One of its major features is that goroutine scheduling is done entirely in user mode, without frequent switches between kernel mode and user mode. This includes memory allocation and release: the runtime maintains a large memory pool in user mode and does not call the system’s malloc directly (unless the pool needs to grow), so the cost is much lower than scheduling OS threads. In addition, the scheduler makes full use of multi-core hardware by distributing goroutines roughly evenly across physical threads. Together with how lightweight goroutines are, this is what gives Go scheduling its performance.

Creating Goroutines

Goroutines in Go are lightweight threads that run concurrently. To create a goroutine, follow these steps:

1.1 Use the go keyword

In Go, you can create a new goroutine using the go keyword followed by a function call. For example:

    go myFunction()

1.2 Passing arguments

You can also pass arguments to the goroutine function:

    go func(arg1 int, arg2 string) {
        // Your code here
    }(value1, value2)

Stopping Goroutines

Stopping goroutines can be tricky, and you should avoid abruptly terminating them. Instead, use channels for graceful goroutine termination:

2.1 Using channels

Create a channel that can be used to signal the goroutine to stop. Here’s an example:

    stopChan := make(chan bool)

    go func() {
        for {
            select {
            case <-stopChan:
                return // Stop the goroutine
            default:
                // Your goroutine logic here
            }
        }
    }()

    // To stop the goroutine
    stopChan <- true

2.2 Using context

Another approach is to use the context package, which provides a way to carry deadlines, cancellations, and other values across API boundaries:

    ctx, cancel := context.WithCancel(context.Background())

    go func() {
        for { // keep working until the context is cancelled
            select {
            case <-ctx.Done():
                return // Stop the goroutine
            default:
                // Your goroutine logic here
            }
        }
    }()

    // To stop the goroutine
    cancel()

Waiting for Goroutines

To ensure that your main program waits for all goroutines to complete, you can use the sync package:

3.1 WaitGroups

A sync.WaitGroup is a helpful tool for waiting for multiple goroutines to finish. You can use it as follows:

    var wg sync.WaitGroup

    for i := 0; i < 3; i++ {
        wg.Add(1)
        go func(id int) {
            defer wg.Done()
            // Your goroutine logic here
        }(i)
    }

    // Wait for all goroutines to finish
    wg.Wait()

3.2 Channels for synchronization

You can also use channels to synchronize goroutines. For example, you can use a channel to signal when a goroutine has finished its work:

    results := make(chan int)

    for i := 0; i < 3; i++ {
        go func(id int) {
            // Your goroutine logic here
            results <- id
        }(i)
    }

    // Collect results
    for i := 0; i < 3; i++ {
        <-results
    }