Sahara tutorial for OpenStack Liberty and Mitaka

This tutorial covers the following parts:

- sahara installation

- cluster configuration

- job configuration

- run map reduce job

Before you start, you should have a working OpenStack cluster running on Ubuntu 14.04.

Perform all of the steps below on the OpenStack controller node. In my environment, the controller node's hostname is controller1.

1, install python virtual environment packages

apt-get install python-setuptools python-virtualenv python-dev libffi-dev libssl-dev

2, Setup a virtual environment for sahara

virtualenv sahara-venv

3, install pytz

sahara-venv/bin/pip install pytz

4, install Sahara from the OpenStack tarballs site, either the stable Liberty release:

sahara-venv/bin/pip install 'http://tarballs.openstack.org/sahara/sahara-stable-liberty.tar.gz'

or the master branch:

sahara-venv/bin/pip install 'http://tarballs.openstack.org/sahara/sahara-master.tar.gz'

5, create a configuration file from the sample file

mkdir sahara-venv/etc

cp sahara-venv/share/sahara/sahara.conf.sample-basic sahara-venv/etc/sahara.conf

6, modify sahara.conf as follows

    [DEFAULT]

    # Hostname or IP address that will be used to listen on.
    # (string value)
    #host=

    # Port that will be used to listen on. (integer value)
    #port=8386

    # If set to True, Sahara will use floating IPs to communicate
    # with instances. To make sure that all instances have
    # floating IPs assigned in Nova Network set
    # "auto_assign_floating_ip=True" in nova.conf.If Neutron is
    # used for networking, make sure that all Node Groups have
    # "floating_ip_pool" parameter defined. (boolean value)
    use_floating_ips=true

    # Use Neutron or Nova Network (boolean value)
    use_neutron=true

    # Use network namespaces for communication (only valid to use in conjunction
    # with use_neutron=True)
    use_namespaces=true

    infrastructure_engine=direct

    # Maximum length of job binary data in kilobytes that may be
    # stored or retrieved in a single operation (integer value)
    #job_binary_max_KB=5120

    # Postfix for storing jobs in hdfs. Will be added to
    # /user/hadoop/ (string value)
    #job_workflow_postfix=

    # enable periodic tasks (boolean value)
    #periodic_enable=true

    # Enables data locality for hadoop cluster.
    # Also enables data locality for Swift used by hadoop.
    # If enabled, 'compute_topology' and 'swift_topology'
    # configuration parameters should point to OpenStack and Swift
    # topology correspondingly. (boolean value)
    #enable_data_locality=false

    # File with nova compute topology. It should
    # contain mapping between nova computes and racks.
    # File format:
    # compute1 /rack1
    # compute2 /rack2
    # compute3 /rack2
    # (string value)
    #compute_topology_file=etc/sahara/compute.topology

    # File with Swift topology. It should contains mapping
    # between Swift nodes and racks. File format:
    # node1 /rack1
    # node2 /rack2
    # node3 /rack2
    # (string value)
    #swift_topology_file=etc/sahara/swift.topology

    # Log request/response exchange details: environ, headers and
    # bodies. (boolean value)
    #log_exchange=false

    # Print debugging output (set logging level to DEBUG instead
    # of default WARNING level). (boolean value)
    debug=true

    # Print more verbose output (set logging level to INFO instead
    # of default WARNING level). (boolean value)
    verbose=true

    # Log output to standard error. (boolean value)
    #use_stderr=true

    # (Optional) Name of log file to output to. If no default is
    # set, logging will go to stdout. (string value)
    #log_file=<None>

    # (Optional) The base directory used for relative --log-file
    # paths. (string value)
    #log_dir=<None>

    # Use syslog for logging. Existing syslog format is DEPRECATED
    # during I, and will change in J to honor RFC5424. (boolean
    # value)
    #use_syslog=false

    # Syslog facility to receive log lines. (string value)
    #syslog_log_facility=LOG_USER

    # List of plugins to be loaded. Sahara preserves the order of
    # the list when returning it. (list value)
    plugins=vanilla,hdp,spark,cdh

    [database]

    # The SQLAlchemy connection string used to connect to the
    # database (string value)
    #connection=<None>
    connection=mysql+pymysql://sahara:qydcos@controller1:3306/sahara

    [keystone_authtoken]

    # Complete public Identity API endpoint (string value)
    auth_uri=http://controller1:5000/v2.0/

    # Complete admin Identity API endpoint. This should specify
    # the unversioned root endpoint eg. https://localhost:35357/
    # (string value)
    identity_uri=http://controller1:35357/

    # Keystone account username (string value)
    admin_user=sahara

    # Keystone account password (string value)
    admin_password=qydcos

    # Keystone service account tenant name to validate user tokens
    # (string value)
    admin_tenant_name=service

    #[ssl]
    #key_file=/root/sahara-venv/etc/key.pem

NOTE1: change the contents of the [database] and [keystone_authtoken] sections to match your environment.

NOTE2: the sahara user must have the admin role in the service tenant.

NOTE3: if you install Sahara stable Liberty or master, use direct mode (infrastructure_engine=direct) to launch a cluster. By default Sahara uses Heat, but the cluster will get stuck in the Spawning state. If you want to use Heat, add heat_enable_wait_condition=false to the [DEFAULT] section.
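Before starting Sahara it can help to sanity-check the edited file. The following is a minimal sketch, not part of Sahara: it reads an excerpt of the configuration with Python's standard configparser and reports a few problems related to the settings used in this tutorial. The function name check_sahara_conf and the SAMPLE_CONF excerpt are assumptions made for illustration, and oslo.config's own parser may treat edge cases differently.

```python
import configparser

# Hypothetical excerpt of sahara.conf with the values used in this tutorial.
SAMPLE_CONF = """
[DEFAULT]
use_floating_ips=true
use_neutron=true
use_namespaces=true
infrastructure_engine=direct
plugins=vanilla,hdp,spark,cdh

[database]
connection=mysql+pymysql://sahara:qydcos@controller1:3306/sahara
"""


def check_sahara_conf(text):
    """Return a list of human-readable problems found in the config text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    defaults = parser["DEFAULT"]
    problems = []

    # use_namespaces is only valid together with use_neutron=true.
    namespaces = defaults.getboolean("use_namespaces", fallback=False)
    neutron = defaults.getboolean("use_neutron", fallback=False)
    if namespaces and not neutron:
        problems.append("use_namespaces=true requires use_neutron=true")

    # The connection string lives in [database], not in [DEFAULT].
    if not parser.has_option("database", "connection"):
        problems.append("[database] connection is not set")

    # The tutorial relies on the vanilla plugin being loaded.
    if "vanilla" not in defaults.get("plugins", "").split(","):
        problems.append("vanilla plugin is not enabled")

    return problems


print(check_sahara_conf(SAMPLE_CONF))
```

To check a real file, pass the result of open("sahara-venv/etc/sahara.conf").read() instead of the sample string.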

7, Create sahara database and user

mysql -u root -p

CREATE DATABASE sahara;

GRANT ALL PRIVILEGES ON sahara.* TO 'sahara'@'localhost' IDENTIFIED BY 'qydcos';

GRANT ALL PRIVILEGES ON sahara.* TO 'sahara'@'%' IDENTIFIED BY 'qydcos';

exit;
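The database name, user and password created here must match the connection string in the [database] section of sahara.conf. As a small illustration (a sketch using only the standard library; describe_connection is a hypothetical helper name), the connection string can be split into its parts to compare them against the GRANT statements:

```python
from urllib.parse import urlsplit


def describe_connection(url):
    """Split an SQLAlchemy-style connection URL into its components."""
    parts = urlsplit(url)
    return {
        "driver": parts.scheme,            # e.g. mysql+pymysql
        "user": parts.username,            # must match the MySQL user
        "password": parts.password,        # must match IDENTIFIED BY
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),  # must match CREATE DATABASE
    }


info = describe_connection(
    "mysql+pymysql://sahara:qydcos@controller1:3306/sahara"
)
print(info)
```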

8, Create sahara user in openstack

openstack user create --domain default --password qydcos sahara

openstack role add --project service --user sahara admin

9, create the sahara service in openstack

openstack service create --name sahara --description "Sahara Data Processing" data-processing

10, create sahara endpoint in openstack

openstack endpoint create --region RegionOne data-processing public http://controller1:8386/v1.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne data-processing admin http://controller1:8386/v1.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne data-processing internal http://controller1:8386/v1.1/%\(tenant_id\)s
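The backslashes in the commands above only stop the shell from interpreting the parentheses; the URL that ends up stored is http://controller1:8386/v1.1/%(tenant_id)s. The %(tenant_id)s part is a placeholder that is replaced with the project (tenant) ID when the endpoint is used. The sketch below illustrates that substitution with Python's own %-formatting, which uses the same placeholder syntax; the tenant ID value is made up for the example.

```python
# Endpoint URL as stored in Keystone (without the shell escaping).
ENDPOINT_TEMPLATE = "http://controller1:8386/v1.1/%(tenant_id)s"


def expand_endpoint(template, tenant_id):
    """Fill the %(tenant_id)s placeholder with a concrete tenant ID."""
    return template % {"tenant_id": tenant_id}


# "0123abcd" is a made-up tenant ID used only for illustration.
print(expand_endpoint(ENDPOINT_TEMPLATE, "0123abcd"))
```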

11, set the following in the [mysqld] section of my.cnf and restart mysql

max_allowed_packet = 256M

12, reinstall eventlet, otherwise you will get monkey_patch error when you start sahara

sahara-venv/bin/pip uninstall eventlet

sahara-venv/bin/pip install eventlet

13, install additional python packages

sahara-venv/bin/pip install debtcollector netaddr monotonic python-dateutil PyMySQL fasteners paste PasteDeploy Routes keystoneauth1 prettytable netifaces enum-compat pycrypto ecdsa functools32 cliff cryptography

14, update the sahara database

sahara-venv/bin/sahara-db-manage --config-file sahara-venv/etc/sahara.conf upgrade head

15, create policy.json with contents below in sahara-venv/etc/policy.json

{

"default": ""

}

16, create api-paste.ini file in sahara-venv/etc/api-paste.ini

    [pipeline:sahara]
    pipeline = cors request_id acl auth_validator sahara_api

    [composite:sahara_api]
    use = egg:Paste#urlmap
    /: sahara_apiv11

    [app:sahara_apiv11]
    paste.app_factory = sahara.api.middleware.sahara_middleware:Router.factory

    [filter:cors]
    paste.filter_factory = oslo_middleware.cors:filter_factory
    oslo_config_project = sahara
    latent_allow_headers = X-Auth-Token, X-Identity-Status, X-Roles, X-Service-Catalog, X-User-Id, X-Tenant-Id, X-OpenStack-Request-ID
    latent_expose_headers = X-Auth-Token, X-Subject-Token, X-Service-Token, X-OpenStack-Request-ID
    latent_allow_methods = GET, PUT, POST, DELETE, PATCH

    [filter:request_id]
    paste.filter_factory = oslo_middleware.request_id:RequestId.factory

    [filter:acl]
    paste.filter_factory = keystonemiddleware.auth_token:filter_factory

    [filter:auth_validator]
    paste.filter_factory = sahara.api.middleware.auth_valid:AuthValidator.factory

    [filter:debug]
    paste.filter_factory = oslo_middleware.debug:Debug.factory
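Every name listed on the pipeline line has to be defined in a [filter:...], [app:...] or [composite:...] section of the same file. The following sketch checks that with the standard configparser module. It is only a rough consistency check written for this tutorial, not how Paste Deploy itself resolves names; the helper name undefined_pipeline_entries and the shortened SAMPLE_PASTE text are assumptions.

```python
import configparser

# Shortened copy of api-paste.ini, enough to show the check.
SAMPLE_PASTE = """
[pipeline:sahara]
pipeline = cors request_id acl auth_validator sahara_api

[composite:sahara_api]
use = egg:Paste#urlmap
/: sahara_apiv11

[app:sahara_apiv11]
paste.app_factory = sahara.api.middleware.sahara_middleware:Router.factory

[filter:cors]
paste.filter_factory = oslo_middleware.cors:filter_factory

[filter:request_id]
paste.filter_factory = oslo_middleware.request_id:RequestId.factory

[filter:acl]
paste.filter_factory = keystonemiddleware.auth_token:filter_factory

[filter:auth_validator]
paste.filter_factory = sahara.api.middleware.auth_valid:AuthValidator.factory
"""


def undefined_pipeline_entries(text, pipeline="sahara"):
    """Return pipeline entries that have no matching section in the file."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    names = parser.get("pipeline:" + pipeline, "pipeline").split()
    # Section names look like "filter:cors"; keep the part after the colon.
    defined = {s.split(":", 1)[1] for s in parser.sections() if ":" in s}
    return [name for name in names if name not in defined]


print(undefined_pipeline_entries(SAMPLE_PASTE))
```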

17, start sahara in background

nohup sahara-venv/bin/sahara-all --config-file sahara-venv/etc/sahara.conf &

The Sahara log is written to the nohup.out file in the current directory.
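Once Sahara is running, a quick way to confirm that the API is reachable is to try a TCP connection to its port (8386 by default, as shown in sahara.conf). This is a minimal sketch with the standard socket module; is_listening is a hypothetical helper, and a successful connection only shows that something accepts connections on that port, not that it is Sahara.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, unknown host, and similar errors.
        return False


if __name__ == "__main__":
    # Host name and port as used in this tutorial.
    print(is_listening("controller1", 8386))
```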

18, download the Sahara vanilla image from http://sahara-files.mirantis.com/images/upstream/liberty/sahara-liberty-vanilla-2.7.1-ubuntu-14.04.qcow2 and register it in OpenStack Glance.

openstack image create "sahara-vanilla-latest-ubuntu" --file sahara-liberty-vanilla-2.7.1-ubuntu-14.04.qcow2 --disk-format qcow2 --container-format bare --public

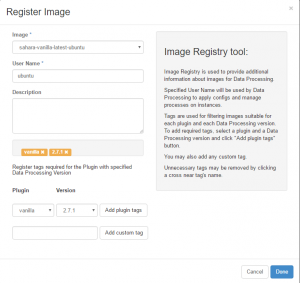

19, register the vanilla image in sahara with username ubuntu and tags plugin: vanilla, version: 2.7.1

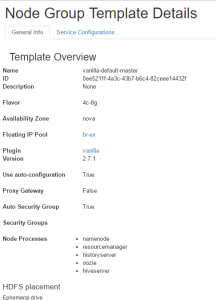

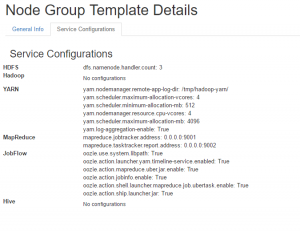

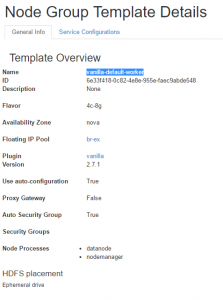

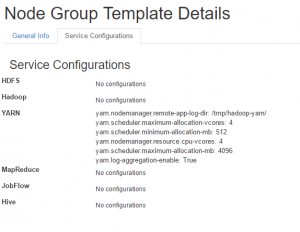

20, create node group templates

1, vanilla-default-master

NOTE1: yarn.nodemanager.remote-app-log-dir must be set to a valid path in HDFS; I use /tmp/hadoop-yarn

NOTE2: yarn.log-aggregation-enable must be true

2, vanilla-default-worker

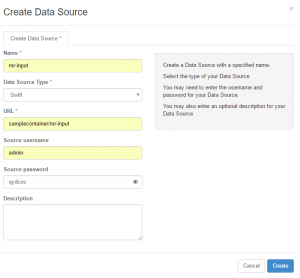

21, create data input file in swift

1, in Swift, create a container named samplecontainer

2, in Swift, upload the mr-input file into samplecontainer

The mr-input file can contain whatever you want; just make sure that it is a plain text file.

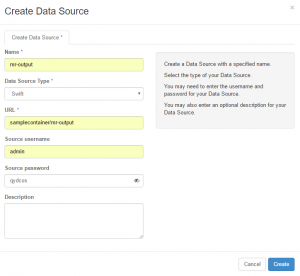

22, create data source in sahara

1, datasource for input file

2, datasource for output

23, create job binary data and job binary in sahara

The Oozie examples are bundled with the Oozie distribution in the oozie-examples.tar.gz file.

tar xzf oozie-examples.tar.gz

sahara job-binary-data-create --name oozie-examples-4.2.0.jar --file examples/apps/map-reduce/lib/oozie-examples-4.2.0.jar

sahara job-binary-create --name oozie-examples-4.2.0.jar --url internal-db://35876d74-8814-41b2-b63a-04543e64d702

NOTE: the ID in internal-db://35876d74-8814-41b2-b63a-04543e64d702 will be different in your environment.
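The --url value is simply the prefix internal-db:// followed by the ID of the job binary data object. A small sketch of building it, assuming the ID is a UUID like the one shown above (internal_db_url is a hypothetical helper, not a Sahara function):

```python
import uuid


def internal_db_url(job_binary_data_id):
    """Build an internal-db:// URL from a job binary data ID.

    Raises ValueError if the ID is not a well-formed UUID, which catches
    copy-and-paste mistakes before the URL is passed to the CLI.
    """
    parsed = uuid.UUID(job_binary_data_id)
    return "internal-db://" + str(parsed)


# The ID below is the one from this tutorial; yours will differ.
print(internal_db_url("35876d74-8814-41b2-b63a-04543e64d702"))
```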

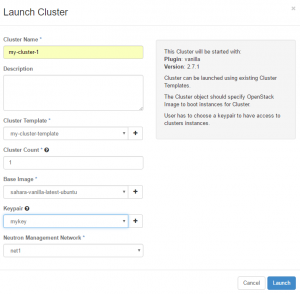

24, create cluster template

1, name it whatever you want; I use my-cluster-template

2, on the node groups tab, select vanilla-default-master with count 1 and vanilla-default-worker with count 2

3, on the HDFS parameters tab, set dfs.replication to 1

4, keep the defaults for everything else

25, launch a cluster with the template created above

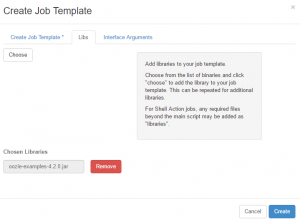

26, create job template

1, name: mr-job

2, type: MapReduce

3, on libs tab, choose oozie-examples-4.2.0.jar

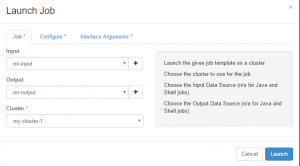

27, run map reduce job on existing cluster

Only choose the input, output and cluster sections, then click Launch; nothing else needs to be configured.

28, wait and check job result

1, on the dashboard, the job is in Succeeded status

2, in Swift, check the job output data in the samplecontainer/mr-output directory. It should have the same content as the input file, with a number at the head of each line.
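The numbers at the head of each line are most likely the byte offsets of the lines in the input file: with Hadoop's default text input, each record's key is the byte offset of its line, and a job that passes records through unchanged writes key and value side by side. This reading is an assumption about the example job, not something confirmed above. The sketch below simulates that behaviour in plain Python so the expected output can be predicted for a given input; identity_mapreduce is a made-up name.

```python
def identity_mapreduce(data):
    """Return (byte_offset, line) pairs, one per line of the input bytes.

    Mimics a pass-through job over text input: the key is the byte offset
    at which the line starts, the value is the line without its newline.
    """
    records = []
    offset = 0
    for raw in data.splitlines(keepends=True):
        records.append((offset, raw.rstrip(b"\r\n").decode("utf-8")))
        offset += len(raw)
    # Output is ordered by key, as it would be after the shuffle phase.
    return sorted(records)


for key, value in identity_mapreduce(b"hello\nworld\n"):
    print("%d\t%s" % (key, value))
```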

29, Good luck!

Hi,

I need to run a map-reduce job: I will give some content as input and search for one word, and the output should be that word and how many times it appears in the content. I need that job binary; please help me with this.

In step 23, click oozie-examples.tar; I use that job binary file. Do not upload it from the dashboard, it will always give an error.

I use the command line to create it.
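For the word-count question above, the logic of such a job can be sketched in plain Python as a map step that emits (word, 1) pairs and a reduce step that sums them. This is only an illustration of the idea, not a job binary that Sahara can run; the function names are made up for the example.

```python
import re
from collections import Counter


def map_words(line):
    """Map step: emit a (word, 1) pair for every word in the line."""
    for word in re.findall(r"[A-Za-z0-9']+", line.lower()):
        yield word, 1


def reduce_counts(pairs):
    """Reduce step: sum the counts for each word."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return totals


def count_word(text, target):
    """Return how many times target occurs in text (case-insensitive)."""
    pairs = (pair for line in text.splitlines() for pair in map_words(line))
    return reduce_counts(pairs)[target.lower()]


print(count_word("the cat and the hat\nThe end", "the"))
```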

Hi,

Shaun, how do I enable the Sahara UI in the OpenStack dashboard? Could you please provide the steps? Thanks a lot.

I haven't installed any additional sahara-ui packages. Once you have registered the sahara service and its endpoints, the Data Processing panel appears under the Project panel in the existing dashboard.

Hi, I installed OpenStack Liberty with two nodes (controller and compute1). I installed Sahara on the compute node, and I am running: nohup sahara-venv/bin/sahara-all --config-file sahara-venv/etc/sahara.conf &

I am getting this output

2016-09-09 16:39:57.481 3212 INFO sahara.utils.rpc [-] Notifications enabled

2016-09-09 16:39:58.108 3212 INFO sahara.plugins.base [-] Plugin ambari loaded sahara.plugins.ambari.plugin:AmbariPluginProvider

2016-09-09 16:39:58.109 3212 INFO sahara.plugins.base [-] Plugin vanilla loaded sahara.plugins.vanilla.plugin:VanillaProvider

2016-09-09 16:39:58.109 3212 INFO sahara.plugins.base [-] Plugin cdh loaded sahara.plugins.cdh.plugin:CDHPluginProvider

2016-09-09 16:39:58.109 3212 INFO sahara.plugins.base [-] Plugin mapr loaded sahara.plugins.mapr.plugin:MapRPlugin

2016-09-09 16:39:58.109 3212 INFO sahara.plugins.base [-] Plugin storm loaded sahara.plugins.storm.plugin:StormProvider

2016-09-09 16:39:58.109 3212 INFO sahara.plugins.base [-] Plugin spark loaded sahara.plugins.spark.plugin:SparkProvider

2016-09-09 16:39:58.110 3212 INFO sahara.main [-] Sahara all-in-one started

2016-09-09 16:39:58.525 3212 INFO keystonemiddleware.auth_token [-] Starting Keystone auth_token middleware

2016-09-09 16:39:58.528 3212 WARNING keystonemiddleware.auth_token [-] Use of the auth_admin_prefix, auth_host, auth_port, auth_protocol, identity_uri, admin_token, admin_user, admin_password, and admin_tenant_name configuration options was deprecated in the Mitaka release in favor of an auth_plugin and its related options. This class may be removed in a future release.

2016-09-09 16:39:58.531 3212 INFO sahara.main [-] Driver all-in-one successfully loaded

2016-09-09 16:39:58.532 3212 INFO oslo_service.periodic_task [-] Skipping periodic task check_for_zombie_proxy_users because its interval is negative

2016-09-09 16:39:58.532 3212 INFO oslo_service.periodic_task [-] Skipping periodic task heartbeat because its interval is negative

2016-09-09 16:39:58.563 3212 INFO sahara.main [-] Driver heat successfully loaded

2016-09-09 16:39:58.564 3212 INFO sahara.main [-] Driver ssh successfully loaded

2016-09-09 16:39:58.566 3212 INFO oslo.service.wsgi [-] sahara-all listening on :8387

2016-09-09 16:39:58.567 3212 INFO oslo_service.service [-] Starting 1 workers

2016-09-09 16:39:58.571 3212 WARNING oslo_config.cfg [-] Option "rabbit_host" from group "oslo_messaging_rabbit" is deprecated for removal. Its value may be silently ignored in the future.

2016-09-09 16:39:58.571 3212 WARNING oslo_config.cfg [-] Option "rabbit_password" from group "oslo_messaging_rabbit" is deprecated for removal. Its value may be silently ignored in the future.

2016-09-09 16:39:58.572 3212 WARNING oslo_config.cfg [-] Option "rabbit_userid" from group "oslo_messaging_rabbit" is deprecated for removal. Its value may be silently ignored in the future.

2016-09-09 16:39:58.572 3212 WARNING oslo_config.cfg [-] Option "rabbit_virtual_host" from group "oslo_messaging_rabbit" is deprecated for removal. Its value may be silently ignored in the future.

How do I proceed further?

The log you pasted shows no errors; try moving on to the next step.

hi,

I am now getting the error “Unable to process plugin tags” when I open the register image dialog. Please help me.

1, do you have this log line?

2016-09-14 15:42:42.797 4206 INFO sahara.utils.wsgi [-] (4206) wsgi starting up on http://0.0.0.0:8386

2, your sahara-api is listening on port 8387. Which port does the endpoint you created in Keystone use, 8386 or 8387?

In step 10 I use 8386, so my endpoint port is 8386.
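The point of the reply above is that the port in the Keystone endpoint URL must be the port Sahara actually listens on. A tiny sketch of that comparison, using only the standard library (endpoint_matches_listener is a hypothetical helper name):

```python
from urllib.parse import urlsplit


def endpoint_matches_listener(endpoint_url, listening_port):
    """Return True if the endpoint URL points at the given port."""
    return urlsplit(endpoint_url).port == listening_port


endpoint = "http://controller1:8386/v1.1/%(tenant_id)s"
# Port 8387 is taken from the log pasted earlier in this thread.
print(endpoint_matches_listener(endpoint, 8387))
```

If this prints False, either recreate the endpoints with the port Sahara is using or set port in sahara.conf to match the endpoints.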

Hi Shaun ,

I followed the steps as given EXCEPT i installed sahara in the COMPUTE node…..all seemed to work BUT when i try to register the sahara image in the dashboard(installed in controller node) , it does not display the PLUGIN & VERSION.

Can you please suggest any ways to rectify it…..(can i even install sahara in compute or should it be strictly installed in controller only)

Also alternatively , if i wish to install sahara in a SEPARATE NODE itself what are the requirements and which parts of the instructions should i follow ?

Thank you

Do you have the correct packages installed?

For the vanilla plugin, the plugin name and version come from the directory names under

sahara-venv/local/lib/python2.7/site-packages/sahara/plugins/vanilla/, which have the format v2_*_*. Check that these directories exist.

Sahara can be installed across different nodes: typically sahara-api on the controller node and sahara-engine on another node.

sahara-all includes both of them.

If you install Sahara on a compute node, or anywhere else, the steps above are the same.

The endpoint uses the IP address of the node running the sahara-api service.

On the API node, start the sahara-api service with sahara-venv/bin/sahara-api --config-file sahara-venv/etc/sahara.conf

On the other node, start the sahara-engine service with sahara-venv/bin/sahara-engine --config-file sahara-venv/etc/sahara.conf

I use an all-in-one installation.
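The directory-name convention mentioned in this reply can be illustrated with a short sketch that turns names such as v2_7_1 into version strings such as 2.7.1. It mirrors the naming pattern only; it is not Sahara's actual loading code, and the directory names in the example are hypothetical.

```python
import re

# Matches names like "v2_7_1": a "v" followed by numbers joined by "_".
_VERSION_DIR = re.compile(r"^v(\d+(?:_\d+)*)$")


def versions_from_dirs(dir_names):
    """Return dotted version strings for names that look like v2_7_1."""
    versions = []
    for name in dir_names:
        match = _VERSION_DIR.match(name)
        if match:
            versions.append(match.group(1).replace("_", "."))
    return versions


# Hypothetical directory listing of .../sahara/plugins/vanilla/.
print(versions_from_dirs(["v2_7_1", "hadoop2", "v2_6_0", "__init__.py"]))
```

On a real installation the list could come from os.listdir() on the vanilla plugin directory; an empty result would explain why no version shows up in the dashboard.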

hi Shaun

I followed your installation step by step, but when I create the user in Keystone I get error 501. I sourced the admin credentials before creating the sahara user in Keystone. I am using Mitaka on Ubuntu 14.04. The problem seems to be the Keystone version. Please help.

Please paste the relevant part of the Apache error log. Does creating other users work?

Hi,

While trying to LAUNCH A CLUSTER with the template that i created using your instructions , i got the following error “default-master is missing ‘floating_ip_pool’ “. What seems to be the problem ?

Please specify a floating IP pool when creating the master node group template.

In step 20, I use the floating IP pool br-ex.

i am having one compute node. This is the hyper visor detail:

- Hostname: compute1

- Type: QEMU

- VCPUs (used): 1

- VCPUs (total): 4

- RAM (used): 1GB

- RAM (total): 1.8GB

- Local Storage (used): 1GB

- Local Storage (total): 455GB

- Instances: 1

when i am launching the cluster i am getting the error.

Have you created an external network in OpenStack? Which networking mode do you use? The floating IP pool is the name of the external network.

i am having a floating ip pool named public, when i type :

nova floating-ip-pool-list , it gives output:

    +--------+
    | name   |
    +--------+
    | public |
    +--------+

so it means that i have a floating ip pool named ‘public’

Also i am specifying ‘public’ as per step 20 but still it is showing the error “default-master is missing ‘floating_ip_pool’ “. What seems to be the problem ?

have you set use_floating_ips=true in default section of sahara.conf file?

yes, i have set use_floating_ips=true in sahara.conf…

It's weird; I have no idea what the problem is in your environment, and more information is probably needed. You can check this page to see whether you have the same problem: https://ask.openstack.org/en/question/47507/sahara-floating-ip-issue-floating-ip-not-found/

Is it possible to create Data source without Swift? I didn’t install Swift on Openstack because of storage problem.

it is okay.

Hi,

I’m trying to install sahara project on OpenStack kilo version, but not getting any documentation . can I get some help.

yep

Hi Shaun,

I am trying to set up Sahara (Liberty) in a virtualenv and followed your instructions. When starting the Sahara services, I get the errors below. Am I missing a Python module in the virtualenv?

    Traceback (most recent call last):
      File "sahara-venv/bin/sahara-all", line 7, in <module>
        from sahara.cli.sahara_all import main
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/cli/sahara_all.py", line 45, in <module>
        import sahara.main as server
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/main.py", line 31, in <module>
        from sahara.api import v10 as api_v10
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/api/v10.py", line 19, in <module>
        from sahara.service import api
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/service/api.py", line 22, in <module>
        from sahara import conductor as c
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/conductor/__init__.py", line 18, in <module>
        from sahara.conductor import api as conductor_api
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/conductor/api.py", line 22, in <module>
        from sahara.conductor import resource as r
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/conductor/resource.py", line 30, in <module>
        from sahara.conductor import objects
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/conductor/objects.py", line 33, in <module>
        CONF.import_opt('node_domain', 'sahara.config')
      File "/root/sahara-venv/local/lib/python2.7/site-packages/oslo_config/cfg.py", line 2723, in import_opt
        __import__(module_str)
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/config.py", line 25, in <module>
        from sahara.topology import topology_helper
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/topology/topology_helper.py", line 26, in <module>
        from sahara.utils.openstack import nova
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/utils/openstack/nova.py", line 22, in <module>
        from sahara.utils.openstack import images
      File "/root/sahara-venv/local/lib/python2.7/site-packages/sahara/utils/openstack/images.py", line 73, in <module>
        class SaharaImageManager(images.ImageManager):
    AttributeError: 'module' object has no attribute 'ImageManager'